The AI Community Is Demanding More Transparent Algorithms—Here’s Why


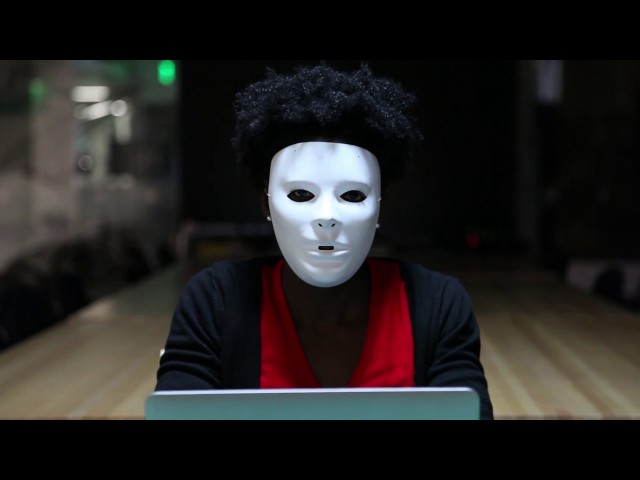

If you wanted to sum up racial bias in AI algorithms in one iconic moment, just take a few seconds of footage from the Netflix documentary Coded Bias. Minutes into the film, MIT researcher Joy Buolamwini discovers that the facial recognition software she installed on her computer won't recognize her dark-skinned face until she puts on a white mask. Premiering in January 2020, the film shows how algorithmic bias has infiltrated every aspect of our lives, from sorting resumes for job applications and allocating social services to deciding who sees advertisements for housing and who is approved for a mortgage.

More importantly, the film served as a rude awakening to the notion that machines aren’t neutral, that AI bias is “a form of computationally imposed ideology, rather than an unfortunate rounding error,” writes Janus Rose, a senior editor at VICE. Worse still, many of these algorithms are “black boxes,” machine learning models whose inner workings are largely unknown even to their creators because of their immense complexity.

“I am thrilled that these documentaries are out there and people are talking about it because algorithmic literacy is another huge part of it,” said Alison Cossette, a data scientist at NPD Group and a mentor at Springboard. “We need for the general public to understand what algorithms do and don’t do.”

What are black-box algorithms?

Allowing black-box algorithms to make major decisions on our behalf is a symptom of a larger problem: we mistakenly believe that big data yields reliable and objective truths if only we can extract them using machine learning tools—what Microsoft researcher Kate Crawford calls “data fundamentalism.”

Researchers at the University of Georgia discovered that people are more likely to trust answers generated by an algorithm than those from their fellow humans. The study also found that as the problems became more difficult, participants were more likely to rely on the advice of an algorithm than on a crowdsourced evaluation.

These findings played out in the real world in May 2020, when numerous U.S. government officials relied on a pandemic-prediction model by the Federal Emergency Management Agency to decide when and how to lift stay-at-home orders and allow businesses to reopen, defying the counsel of the Centers for Disease Control and scores of health experts. In fact, that same model predicted in July 2019 that a nationwide pandemic would overwhelm hospitals and result in an economic shutdown.

Thanks to well-documented instances of algorithms outperforming humans, such as beating us at Jeopardy!, defeating world chess champions, and transcribing audio with fewer mistakes than people do, we tend to overestimate what AI can do. But when it comes to tasks that require human judgment, common sense, and ethical sensibilities, AI is exposed for what it really is: a series of mathematical equations that search for correlations between data points without inferring meaningful causal relationships.

“We interact with the real world and our survival depends on us understanding the world; AI does not,” said Hobson Lane, co-founder of Tangible AI and a Springboard mentor. “It just depends on being able to mimic the words coming out of a human’s mouth, and that’s a completely different type of knowledge about the world.”

From a survival standpoint, a world where algorithms curate our social media feeds, music playlists, and the ads we see doesn’t necessarily pose an immediate existential threat, but big decisions about people’s lives are increasingly made by software systems and algorithms.

“No matter what model you are building, someone is making either a policy or financial decision based upon that insight,” said Cossette. “Even if it’s a B2B situation or it’s internal, at some point it rolls out to societal impact.”


When can black-box algorithms become dangerous?

An overreliance on these algorithms can destroy people’s livelihoods, violate their privacy, and perpetuate racism. The potential for algorithms to perpetuate cycles of oppression by excluding certain groups on the basis of race, gender, and other protected attributes is known as technological redlining. The term borrows from real estate redlining, the discriminatory practice of denying mortgages and other services to residents of predominantly Black and minority neighborhoods.

In Coded Bias, a celebrated teacher was fired after receiving a low rating from an algorithmic assessment tool and a group of tenants in a predominantly black neighborhood in Brooklyn campaigned against their landlord after the installation of a facial recognition system in their building.

When it comes to the U.S. criminal justice system, algorithms can turn sentencing decisions into a game of Russian roulette. Risk assessment models used in courtrooms across the country estimate a defendant’s risk of reoffending, producing scores that judges and other officials use to inform decisions about bond amounts, sentence lengths, and parole.

In 2016, the investigative news outlet ProPublica obtained the risk scores of more than 7,000 people arrested in Broward County, Florida, in 2013 and 2014 and checked how many were charged with new crimes over the next two years, the same benchmark used by the creators of the algorithm. Not only were the scores unreliable (only 20% of the people predicted to commit violent crimes actually did so), but the journalists also uncovered significant racial disparities.

The formula was particularly likely to falsely flag Black defendants as future criminals, wrongly labeling them this way at almost twice the rate of white defendants. Even after controlling for criminal history, age, and gender, Black defendants were 77% more likely to be flagged as at high risk of committing a future violent crime and 45% more likely to be predicted to commit a future crime of any kind.
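The disparity ProPublica describes is a gap in false positive rates: among people who did not go on to reoffend, how often was each group labeled high risk? Below is a minimal sketch of that calculation. The records and the `(group, flagged_high_risk, reoffended)` layout are hypothetical toy data invented for illustration, not the Broward County dataset.

```python
from collections import defaultdict

# Hypothetical toy records: (group, flagged_high_risk, reoffended).
# Invented for illustration; these are NOT ProPublica's data.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", True, True), ("A", False, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("B", True, True), ("B", False, True),
]

def false_positive_rates(rows):
    """Per group: share of people who did NOT reoffend but were still flagged high risk."""
    flagged = defaultdict(int)    # non-reoffenders who were flagged
    negatives = defaultdict(int)  # all non-reoffenders
    for group, is_flagged, reoffended in rows:
        if not reoffended:
            negatives[group] += 1
            if is_flagged:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}

rates = false_positive_rates(records)
print(rates)                    # {'A': 0.5, 'B': 0.25}
print(rates["A"] / rates["B"])  # 2.0: group A is wrongly flagged twice as often
```

Note that the metric only looks at people whose true outcome was "did not reoffend," which is why it captures being *wrongly* labeled rather than simply being labeled more often.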

Can model interpretability provide the answer?

The root of the problem is how difficult it can be to understand how these machine learning models arrive at their outputs. “Black box” machine learning models are created directly from the data by an algorithm, meaning that even the humans who design them cannot precisely explain how the model makes predictions; they can only observe the inputs and outputs. In other words, the actual decision process can be entirely opaque.
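Even when the internals are opaque, a model can still be probed purely through its inputs and outputs. One common model-agnostic technique (not one this article names) is permutation importance: shuffle one feature's values and measure how much accuracy drops. In the sketch below, `black_box` is a hypothetical stand-in for an opaque model, and the feature names and data are made up.

```python
import random

def black_box(row):
    # Hypothetical opaque model: pretend we can call it but cannot read this logic.
    income, _age, zip_digit = row
    return 1 if 0.8 * income + 0.5 * zip_digit > 5 else 0

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled on its own."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            permuted = [r[:col] + (v,) + r[col + 1:] for r, v in zip(rows, shuffled)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Made-up evaluation data. Labels are the model's own outputs, so we measure
# how much the model relies on each input (baseline accuracy is 1.0).
data_rng = random.Random(42)
rows = [(data_rng.uniform(0, 10), data_rng.uniform(18, 80), data_rng.randint(0, 9))
        for _ in range(200)]
labels = [black_box(r) for r in rows]

for name, imp in zip(["income", "age", "zip_digit"],
                     permutation_importance(black_box, rows, labels)):
    print(f"{name}: {imp:.3f}")  # age prints 0.000 because the model ignores it
```

A result like this can prompt the right questions: here a location-derived feature matters, which is exactly the kind of signal that can act as a proxy for a protected attribute.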

To better understand this, consider how a neural network functions. An algorithm is essentially a set of step-by-step instructions or operations that a computer carries out. When a neural network trains on a set of training data, it makes predictions, measures how far they are from the correct answers using a loss function, and slightly adjusts its internal weights to reduce that error. The process repeats again and again until the model’s performance stops improving. Because the learned behavior ends up spread across thousands or even millions of interacting weights, it’s practically impossible to fully understand the relative importance a neural network assigns to each of the different variables.
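That predict, measure, adjust loop can be sketched with a single artificial neuron (a logistic unit) trained by gradient descent. This is a deliberately tiny, hypothetical example with made-up data and an arbitrarily chosen learning rate; a real neural network repeats the same idea across many layers and far more weights, which is what makes it hard to read.

```python
import math

# Made-up training data: two input features per example and a binary label.
X = [(0.0, 1.0), (1.0, 1.0), (2.0, 0.5), (3.0, 0.0), (0.5, 2.0), (2.5, 0.2)]
y = [0, 0, 1, 1, 0, 1]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

def log_loss(w, b):
    """Average cross-entropy between predictions and labels (the error being minimized)."""
    eps = 1e-12
    return -sum(t * math.log(predict(w, b, x) + eps)
                + (1 - t) * math.log(1 - predict(w, b, x) + eps)
                for x, t in zip(X, y)) / len(X)

w, b, lr = [0.0, 0.0], 0.0, 0.5
for epoch in range(2000):
    # 1) predict, 2) measure the error, 3) nudge each weight against its gradient
    grad_w, grad_b = [0.0, 0.0], 0.0
    for x, t in zip(X, y):
        err = predict(w, b, x) - t  # derivative of log loss w.r.t. the neuron's input sum
        grad_w[0] += err * x[0]
        grad_w[1] += err * x[1]
        grad_b += err
    w = [w[i] - lr * grad_w[i] / len(X) for i in range(2)]
    b -= lr * grad_b / len(X)

print("weights:", w, "bias:", b, "loss:", round(log_loss(w, b), 4))
print([round(predict(w, b, x)) for x in X])
```

With two weights the result is still readable; the interpretability problem appears when the same update rule is applied to millions of weights stacked in layers.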

For example, let’s say you’re training a neural network to recognize images of sheep, so you train the algorithm on an image dataset of sheep on green pastures. The model might incorrectly assume that the green image pixels are a defining characteristic of sheep, and go on to classify any other object in a grassy setting as such.

Model interpretability enables data scientists to test the causality of the features, adjust the dataset and debug the model accordingly. For example, you can alter the data by adding images with different backgrounds or simply cropping out the background. Deep learning models are prone to learning the most discriminative features, even if they’re the wrong ones. In a production setting, a data scientist may be pressured to select the algorithm with the highest accuracy, even if the training data may have been problematic.
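A decision stump (a one-feature rule, the simplest possible model) is enough to show a model latching onto the most discriminative feature even when it is the wrong one, and how changing the data fixes it. Everything here is hypothetical: each "image" is reduced to two made-up binary features, and the stump stands in for a far more complex deep learning model.

```python
FEATURES = ["green_background", "woolly_texture"]

def stump_accuracies(samples):
    """Training accuracy of the rule 'predict sheep if feature == 1', for each feature."""
    n_features = len(samples[0][0])
    return [
        sum((x[i] == 1) == (label == 1) for x, label in samples) / len(samples)
        for i in range(n_features)
    ]

def best_feature(samples):
    accs = stump_accuracies(samples)
    return FEATURES[accs.index(max(accs))], accs

# Hypothetical samples: ((green_background, woolly_texture), label), label 1 = sheep.
# Original data: every sheep photo was taken on a pasture; texture is a noisier signal.
original = [((1, 1), 1)] * 8 + [((1, 0), 1)] * 2 + [((0, 0), 0)] * 8 + [((0, 1), 0)] * 2

# Augmented data: add sheep on other backgrounds and non-sheep (say, cows) on grass.
augmented = original + [((0, 1), 1)] * 6 + [((1, 0), 0)] * 6

print(best_feature(original))   # ('green_background', [1.0, 0.8])
print(best_feature(augmented))  # ('woolly_texture', [0.625, 0.875])
```

Once the background no longer separates the classes perfectly, the model has to fall back on the feature that actually describes sheep.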

“When the drive is for accuracy, it’s not unthinkable that people are going to game the algorithm so that it looks good,” said Cossette. “If you’re in a Kaggle competition, you want the highest accuracy, and it doesn’t matter in reality if there’s some other sort of impact.”

Most machine learning models are not designed with interpretability constraints in mind. Instead, they are intended to be accurate predictors on a static dataset that may or may not accurately represent real-world conditions.

“Many people do not realize that the problem is often with the data, as opposed to what machine learning does with the data,” Rich Caruana, a senior researcher at Microsoft, said at the 2017 annual meeting of the American Association for the Advancement of Science. “It depends on what you are doing with the model and whether the data is used in the right way or the wrong way.”

Caruana had worked on a pneumonia risk model that estimated a pneumonia patient’s risk of dying from the disease, and therefore who should be admitted to the hospital. Based on the patient data, the model concluded that patients with a history of asthma had a lower risk of dying from pneumonia, when in fact asthma is a well-known risk factor for pneumonia. The model learned this pattern because patients with asthma tend to receive care faster and more aggressively, which lowered their observed mortality in the data.
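The asthma anomaly is a confounding problem, and a few lines of counting show how it arises. The numbers below are entirely hypothetical (not Caruana's data), and the `aggressive_care` flag is an assumed stand-in for the faster, more intensive treatment asthma patients received. Asthma patients look safer overall, yet within each level of care they fare worse.

```python
def cohort(asthma, aggressive_care, n, deaths):
    """Build n hypothetical records (asthma, aggressive_care, died); the first `deaths` died."""
    return [(asthma, aggressive_care, i < deaths) for i in range(n)]

patients = (
    cohort(True, True, 90, 9) + cohort(True, False, 10, 4)       # asthma: usually fast-tracked
    + cohort(False, True, 20, 1) + cohort(False, False, 80, 16)  # no asthma: usually standard care
)

def death_rate(asthma, care=None):
    """Observed mortality for one asthma status, optionally restricted to one level of care."""
    rows = [p for p in patients if p[0] == asthma and (care is None or p[1] == care)]
    return sum(p[2] for p in rows) / len(rows)

# What a model sees if it only looks at the asthma flag: asthma appears protective.
print("overall:", death_rate(True), "vs", death_rate(False))                      # 0.13 vs 0.17
# Holding the level of care fixed reverses the picture.
print("aggressive care:", death_rate(True, True), "vs", death_rate(False, True))  # 0.1 vs 0.05
print("standard care:", death_rate(True, False), "vs", death_rate(False, False))  # 0.4 vs 0.2
```

A model that only sees outcomes, not the treatment that produced them, will faithfully learn the misleading overall pattern. An interpretable model at least makes that learned rule visible so a clinician can catch it.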

The misconception that accuracy must be sacrificed for interpretability has allowed companies to market and sell proprietary black-box models for high-stakes decisions when simple interpretable models exist for the same tasks.

“Consumers are vastly more aware of these issues especially as they entangle with data privacy concerns,” said Ayodele Odubela, a data science career coach and responsible AI educator with Kforce at Microsoft. “My organization, Ethical AI Champions, is working on educational tools for engineers in high-risk development teams as well as taking over the marketing aspect of AI products to speak more accurately about algorithm limitations and setting the right expectations for customers and consumers.”

The explainable AI solution

Explainable AI, often abbreviated as ‘XAI,’ is a blanket term for the push to make algorithms interpretable, so that their computations can be understood by their creators and users, like showing your work in a math problem.

“I think there are two layers to explainable AI,” said Cossette. “There’s the interpretability of the algorithm itself–which is, why did this thing spit out this answer? Then there are the deep layers and the training data, and how those two things affect the outcome.”

One way to advocate for transparency in machine learning is to use only algorithms that are interpretable. Linear regression, logistic regression, and other extensions of linear regression can be understood through their mathematical formulas and the weights (coefficients) of the variables, while decision trees can be read as a sequence of if/then rules.
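Because an interpretable model is just a formula, every individual prediction can be broken down into per-feature contributions. Here is a minimal sketch assuming a hypothetical loan-approval logistic regression; the feature names, weights, and bias are hand-picked for illustration, not taken from any trained model.

```python
import math

# Hypothetical, hand-picked coefficients for illustration only (not a trained model).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0

def explain(applicant):
    """Return approval probability and each feature's additive contribution to the log-odds."""
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    log_odds = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-log_odds))
    return probability, contributions

prob, parts = explain({"income_k": 85, "debt_ratio": 0.35, "late_payments": 1})
for name, c in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>14}: {c:+.2f}")
print(f"approval probability: {prob:.2f}")
```

The explanation is exact rather than approximate: the log-odds are literally the bias plus the listed contributions, so an applicant can be told precisely which factor pulled the score down and by how much.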

For example, let’s take a hypothetical situation where a business uses a linear regression equation to determine the starting salary for its junior employees based on years of work experience and GPA. The equation would look like this:

Salary = W1 * experience + W2 * GPA

We have an interpretable linear equation where the weights can tell us which variable is the stronger determinant of salary, experience or GPA, provided the variables are measured on comparable scales (for example, after standardizing them). Decision trees are also interpretable because we can trace how decisions are made from the root node to a leaf node. However, a tree becomes harder to interpret when it is trained to a depth of eight or nine, where there are too many decision rules to present effectively in a flow diagram. In that case, you can use feature importance scores to summarize how much each feature matters at a global level.
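To see why scale matters when reading those weights, here is a minimal sketch that fits the salary equation by ordinary least squares on hypothetical data, then converts each weight to a standardized weight (the weight multiplied by the feature's standard deviation). The sketch adds an intercept term that the simplified equation above leaves out. The records were generated from an invented formula, 45000 + 2500 * experience + 4000 * GPA, so the fit can be checked.

```python
import statistics

# Hypothetical junior-employee records; salaries come from an invented formula.
experience = [0, 1, 2, 3, 4, 5]
gpa = [3.0, 3.6, 2.8, 3.9, 3.2, 3.5]
salary = [45000 + 2500 * e + 4000 * g for e, g in zip(experience, gpa)]

def fit_two_feature_ols(x1, x2, y):
    """Least squares for y = b + w1*x1 + w2*x2, solved via centered normal equations."""
    m1, m2, my = statistics.fmean(x1), statistics.fmean(x2), statistics.fmean(y)
    a = [v - m1 for v in x1]
    c = [v - m2 for v in x2]
    d = [v - my for v in y]
    s11 = sum(u * u for u in a)
    s22 = sum(v * v for v in c)
    s12 = sum(u * v for u, v in zip(a, c))
    s1y = sum(u * t for u, t in zip(a, d))
    s2y = sum(v * t for v, t in zip(c, d))
    det = s11 * s22 - s12 * s12  # nonzero as long as the two features aren't collinear
    w1 = (s1y * s22 - s2y * s12) / det
    w2 = (s2y * s11 - s1y * s12) / det
    return my - w1 * m1 - w2 * m2, w1, w2

b, w1, w2 = fit_two_feature_ols(experience, gpa, salary)
print(f"raw weights: experience {w1:.0f} per year, GPA {w2:.0f} per point")
print(f"standardized: experience {w1 * statistics.pstdev(experience):.0f}, "
      f"GPA {w2 * statistics.pstdev(gpa):.0f}")
```

The raw GPA weight (4,000 per point) is larger than the experience weight (2,500 per year), but a full GPA point is a big jump while a year of experience is ordinary in this data. After scaling by each feature's spread, experience comes out ahead (roughly 4,270 versus 1,490).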

Convolutional neural networks (CNNs), used for tasks like object classification and detection, were once considered unassailable enigmas: they can have hundreds of convolutional layers, and each layer learns filters of increasing complexity. Researchers are now discovering ways to make them interpretable. One approach, when building a CNN for image recognition, is to train the filters to recognize object parts rather than simple textures, giving the model a clearly defined set of object features to recognize. In the researchers’ example, a cat would be recognized by its distinctly feline facial features rather than by paws, ears, or a snout, which other animals also have. This more closely mimics how humans recognize and classify objects, which is the ultimate goal of computer vision.

“Algorithms find things in images that the human eye doesn’t find because it can measure the pixel value to extremely high precision, and so it sees some slight pattern in all the pixels,” said Lane. “You have to be really careful about randomizing not only how you generate data, but how you create and label the data–especially with a neural network.”
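One concrete safeguard in the spirit of Lane's advice is to shuffle examples before splitting them into training and test sets, so that the order in which data was collected or labeled (for example, all of one source's images first) doesn't land entirely in one split. Here is a minimal standard-library sketch; the `source` field and the seed value are hypothetical.

```python
import random
from collections import Counter

# Hypothetical examples collected in batches: all of source "A" first, then all of "B".
examples = [{"id": i, "source": "A" if i < 50 else "B"} for i in range(100)]

def shuffled_split(items, test_fraction=0.2, seed=13):
    """Shuffle a copy of the data with a fixed seed, then cut it into train and test sets."""
    rng = random.Random(seed)
    items = list(items)
    rng.shuffle(items)
    cut = len(items) - round(len(items) * test_fraction)
    return items[:cut], items[cut:]

naive_test = examples[80:]  # unshuffled: the test set is 100% source "B"
train, test = shuffled_split(examples)
print(Counter(e["source"] for e in naive_test))
print(Counter(e["source"] for e in test))  # typically a mix of "A" and "B"
```

Fixing the seed keeps the split reproducible, which matters when someone later needs to audit exactly which examples the model was evaluated on.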

Interpretable models are important because people need to be able to trust algorithms that make decisions on their behalf. From an ethical standpoint, fairness is a big part of the equation. AI is only as good as the data it’s trained on, and datasets are often fraught with biases for two main reasons: either the data points reflect real-world racial and gender discrimination, or the people who create the algorithms don’t represent the diverse interests and viewpoints of the general population.

In 2018, it was reported that Amazon had scrapped an experimental AI recruiting tool because it penalized resumes containing the word “women’s,” so an applicant who listed an achievement such as “women’s chess club captain” was out of luck. The system had been trained to vet applicants by observing patterns in resumes submitted to the company over a 10-year period, most of which came from men, a reflection of the gender imbalance in the tech industry.
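A model trained on historical outcomes learns whatever correlations those outcomes contain. A quick way to surface a proxy like the one described above is to compare historical outcomes for resumes with and without a given term. The resumes and hiring outcomes below are hypothetical, and the simple substring check is an assumption standing in for real text features.

```python
# Hypothetical historical data: (resume text, was_hired). Invented for illustration.
history = [
    ("captain of chess club, python, sql", True),
    ("women's chess club captain, python, sql", False),
    ("java developer, hackathon winner", True),
    ("women's coding society lead, java", False),
    ("sql analyst, tableau", True),
    ("women's college graduate, python", True),
    ("c++ intern, robotics team", False),
    ("python, machine learning coursework", True),
]

def hire_rate_by_term(rows, term):
    """Historical hire rate for resumes that contain `term` versus those that don't."""
    with_term = [hired for text, hired in rows if term in text.lower()]
    without = [hired for text, hired in rows if term not in text.lower()]
    return sum(with_term) / len(with_term), sum(without) / len(without)

rate_with, rate_without = hire_rate_by_term(history, "women's")
print(f"hired with term: {rate_with:.2f}, without: {rate_without:.2f}")  # 0.33 vs 0.80
```

A model trained to predict `was_hired` from resume words would pick up this gap and treat the term as a negative signal, even though it says nothing about an applicant's ability.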

Datasets can also become less accurate over time as social and political circumstances evolve, causing the algorithm’s accuracy to erode due to data obsolescence.

Katie He, a student enrolled in Springboard’s Machine Learning Engineering Career Track, recently encountered this problem while building a machine learning model that would colorize footage using training data from a TV show that aired in the 1970s.

“The skin tone for the actors and actresses was quite homogeneous in the training set because it is a show from the seventies after all,” said He. “So if the model were to colorize footage from a newer TV show, everybody’s face would have the same skin tone, which might not be appropriate.”

Interpretability is necessary except in situations where the algorithmic decision does not directly impact the end-user, such as algorithms that refine internal business processes. Examples include algorithms that perform sentiment analysis to classify call recordings or use machine learning to track mentions of the brand on social media.

Algorithmic auditing and the future of transparency in machine learning

Third parties perform audits to evaluate a business’s regulatory compliance, environmental impact, and process efficiency in order to protect investors from fraud–but the algorithms that directly impact people’s lives are rarely audited. Some companies perform their own audits by evaluating algorithms for bias before and after production, asking questions like: “Is this sample of data representative of reality?” or “Is the algorithm suitably transparent to end-users?”
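The first audit question ("Is this sample of data representative of reality?") can be partly automated by comparing each group's share of the training sample with a reference population. The sketch below uses hypothetical group names, reference shares, and a tolerance of five percentage points; choosing a trustworthy reference distribution is the hard part in practice.

```python
from collections import Counter

def representation_gaps(sample_groups, reference_shares, tolerance=0.05):
    """Return groups whose share of the sample differs from the reference by more than `tolerance`."""
    counts = Counter(sample_groups)
    total = len(sample_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts[group] / total
        if abs(observed - expected) > tolerance:
            gaps[group] = (round(observed, 3), expected)
    return gaps

# Hypothetical training sample and reference population shares (both invented).
sample = ["group_a"] * 700 + ["group_b"] * 250 + ["group_c"] * 50
reference = {"group_a": 0.60, "group_b": 0.25, "group_c": 0.15}
print(representation_gaps(sample, reference))
# {'group_a': (0.7, 0.6), 'group_c': (0.05, 0.15)}
```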

Bias can appear at so many different junctures of the development pipeline, from data wrangling to training the model, making it necessary to develop strategies to evaluate each aspect of a model for undue sources of influence. Another important aspect to consider is how the model’s accuracy will erode over time as real-world conditions evolve.

“In general, every model will decrease in accuracy over time,” said Cossette. “The nature of the world is, data is always changing, things are always changing. So your models will always continue to get worse. So how are you monitoring and managing them?”
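One lightweight answer to Cossette's question is to track how the distribution of each input feature shifts between the data a model was trained on and the data it sees in production. The sketch below computes the Population Stability Index (PSI), a drift metric common in credit-risk modeling (not one the article prescribes), on a hypothetical applicant-age feature with made-up values and bin edges.

```python
import bisect
import math

def bin_shares(values, edges):
    """Fraction of values in each bin [edges[i], edges[i+1]); out-of-range values go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        i = min(max(i, 0), len(counts) - 1)
        counts[i] += 1
    return [c / len(values) for c in counts]

def psi(baseline, recent, edges, floor=1e-6):
    """Population Stability Index: sum over bins of (recent - baseline) * ln(recent / baseline)."""
    b = [max(s, floor) for s in bin_shares(baseline, edges)]  # floor avoids log(0)
    r = [max(s, floor) for s in bin_shares(recent, edges)]
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))

edges = [18, 30, 45, 60, 75]                                # hypothetical age bins
baseline = [20, 25, 30, 35, 40, 45, 50, 55, 60, 65] * 10    # ages at training time
recent = ([25] + [35, 40, 35] + [45, 50, 55] * 2 + [60, 65] * 5) * 5  # older applicants now

print(f"no drift: {psi(baseline, baseline, edges):.3f}")    # 0.000
print(f"drifted:  {psi(baseline, recent, edges):.3f}")
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but those cutoffs are conventions, not guarantees. A drifting input is a cue to re-check accuracy and fairness, not proof that either has degraded.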

Some companies in the AI governance space are contracted by other companies to find and rectify bias in algorithms. Basis AI, a Singapore-based consultancy for responsible AI, serves as a cyber auditor of AI algorithms by making their decision-making processes more transparent and helping data scientists understand when an algorithm might be biased. The company recently partnered with Facebook for the Open Loop initiative to mentor 12 startups in the Asia-Pacific region to prototype ethical and efficient algorithms.

There is a growing field of private auditing firms that review algorithms on behalf of other companies, particularly those that have been criticized for biased outcomes, but it’s unclear if these efforts are truly altruistic or simply done for PR purposes.

Recently, a high-profile case of algorithmic bias put the importance of algorithmic auditing in the spotlight. HireVue, a hiring software company that provides video interview services for major companies like Walmart and Goldman Sachs, was accused of using a biased algorithm that evaluates people’s facial expressions as a proxy for forecasting job performance. HireVue commissioned a third-party audit, and according to a press release from the company, the auditing firm didn’t turn up any biases in the algorithm. However, critics accused HireVue of using the audit as a PR stunt because it examined only a hiring test for early-career candidates rather than the company’s candidate evaluation process as a whole.
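A common first check when auditing a hiring tool in the U.S. is the adverse impact ratio behind the "four-fifths rule" in the Uniform Guidelines on Employee Selection Procedures. Each group's selection rate is divided by the highest group's rate, and ratios below 0.8 are flagged for closer review. Nothing in the reporting says this is how HireVue's audit worked; the sketch simply shows the arithmetic on hypothetical applicant counts.

```python
# Hypothetical audit counts per applicant group: (applicants, advanced to next round).
outcomes = {"group_a": (200, 60), "group_b": (150, 30), "group_c": (120, 33)}

def adverse_impact_ratios(counts):
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected / applied for g, (applied, selected) in counts.items()}
    best = max(rates.values())
    return {g: rate / best for g, rate in rates.items()}

for group, ratio in adverse_impact_ratios(outcomes).items():
    flag = "review (below 0.8)" if ratio < 0.8 else "ok"
    print(f"{group}: {ratio:.2f} {flag}")
# group_a: 1.00 ok
# group_b: 0.67 review (below 0.8)
# group_c: 0.92 ok
```

The ratio only compares outcomes, so it can flag a problem without explaining it. That is one reason critics argue an audit's scope (which test, which candidates) matters as much as its headline result.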

Considering how influential GDPR has been on privacy-focused initiatives, it wouldn’t be surprising to see laws mandating algorithmic audits for businesses in developed countries that already have strong data protection laws.

“We need to insist on addressing power imbalances and how we can implement AI in more responsible ways within all types of businesses, from startups to enterprises,” said Odubela.
