Scaling Machine Learning: How to Train a Very Large Model Using Spark

We often use libraries like pandas and scikit-learn to preprocess data and train machine learning models for personal projects or for competitions on platforms like Kaggle.

However, when we deal with big data in the real world, we need an approach that can spread data processing and model training across many CPUs or GPUs. That’s where Apache Spark comes in.

In this article, we will discuss Apache Spark and walk through a hands-on implementation using PySpark, Spark’s Python API.

*Looking for the Colab Notebook for this post? Find it right here.*

What is Apache Spark, and what are its benefits?

Apache Spark is a distributed, general-purpose computing framework. It is open-source software originally developed in 2009 at the AMPLab of the University of California, Berkeley.

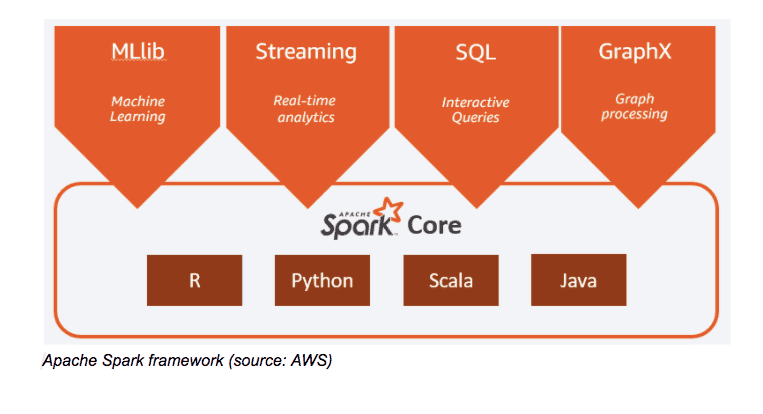

After its initial release in 2010, Spark gained popularity across many industries, and its worldwide developer community has grown significantly since then. Spark provides development APIs in several programming languages, including Scala, Java, Python (the most widely used programming language for data science), and R.

Apache Spark was designed primarily to address the limitations of Hadoop MapReduce. Spark reads data into memory, performs the necessary operations there, and writes the results back. This makes processing fast compared with MapReduce, where each iteration requires reading from and writing to disk. Spark can also cache data in memory so that it can be reused across operations, which makes it much faster than MapReduce for iterative workloads such as training machine learning models. It is also popular among data scientists because of its speed, scalability, and ease of use.

Some benefits of Apache Spark are:

- It is fast and can process and query datasets too large to fit on a single machine

- It is developer-friendly, with APIs in many programming languages, including Java, Python, Scala, and R

- It can handle multiple workloads like machine learning (Spark MLlib), interactive queries (Spark SQL), graph processing (Spark GraphX), and real-time analytics (Spark Streaming)

Different ML and deep learning frameworks built on Spark

There are many machine learning and deep learning frameworks developed on top of Spark including the following:

- Machine learning frameworks on Spark: Apache Spark’s MLlib, H2O.ai’s Sparkling Water, etc.

- Deep learning frameworks on Spark: Elephas, CERN’s Distributed Keras, Intel’s BigDL, Yahoo’s TensorFlowOnSpark, etc.

Machine learning using Spark MLlib

MLlib is the machine learning library included in the Spark framework. It was designed to make machine learning at scale easy. Below are some of the tools MLlib provides:

- Machine learning algorithms: Regression, classification, clustering, collaborative filtering, etc.

- Featurization: Feature selection, extraction, dimensionality reduction, transformation, etc.

- Pipelines: Construction, evaluation, and tuning of machine learning pipelines

- Persistence: Saving and loading models and pipelines

In this section, we will build a machine learning model using PySpark (the Python API for Spark) and MLlib on the sample dataset provided by Spark. We will write and run the code on Google Colab, a notebook platform similar to Jupyter, because it is free to use and easy to set up. For real big data processing and modeling, one can use platforms like Databricks, AWS EMR, Google Cloud Dataproc, etc.

The dataset we use here might look very small for an article about big data, but the code we develop can be applied to large datasets hosted on S3, HDFS, Redshift, Cassandra, Couchbase, etc., usually with changes only to the data-loading step. We use this small dataset to explain how PySpark and MLlib work and to introduce some basic concepts.

The code for this tutorial with a detailed explanation can be found here.